Abstract

Key Contributions

- A geometry-aware token merging strategy for 3D point cloud transformers

- A dynamic token reduction algorithm based on a globally informed graph

- Significant computational efficiency gains while preserving geometric fidelity

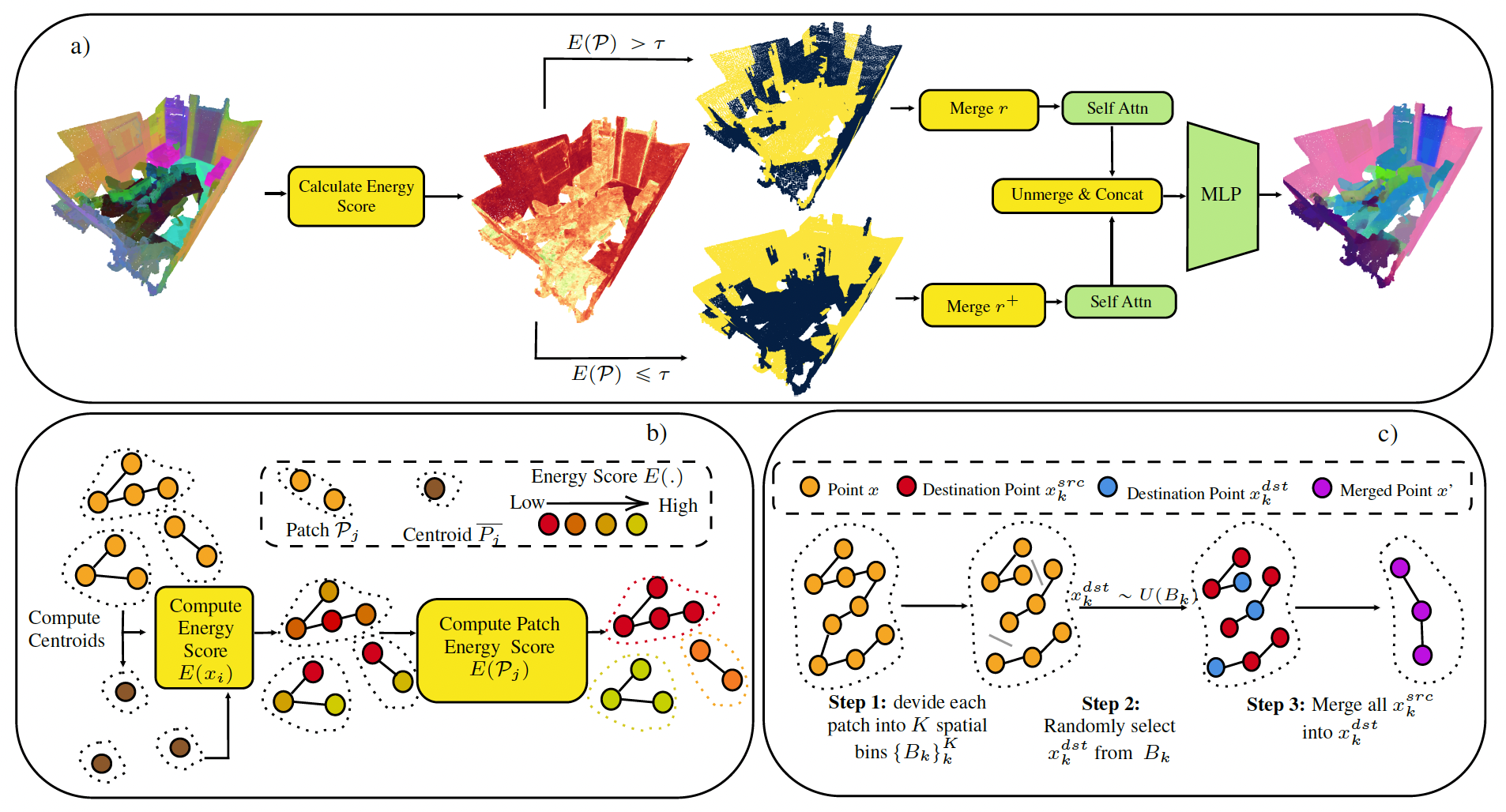

Method Overview

a) For each Point Transformer layer, we compute globally informed energy scores, which are then used to compute patch-level energy scores. b) These patch-level scores guide adaptive merging, so that high-energy patches retain more information. c) Each patch is divided into evenly sized bins, and destination tokens are randomly selected within these bins to enable spatially aware merging.
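The three steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the GitMerge3D implementation. Several choices here are assumptions made for illustration only: the energy formula (one minus the cosine similarity between a token and the mean token of the whole scene), the linear mapping from patch energy to a keep ratio between the hypothetical parameters `r_min` and `r_max`, treating consecutive runs of `patch_size` serialized tokens as patches, and merging by plain averaging.

```python
import numpy as np


def energy_scores(x: np.ndarray) -> np.ndarray:
    """ASSUMED energy: 1 - cosine similarity of each token to the global mean token."""
    xn = x / (np.linalg.norm(x, axis=1, keepdims=True) + 1e-8)
    g = xn.mean(axis=0)
    g = g / (np.linalg.norm(g) + 1e-8)
    return 1.0 - xn @ g  # higher = less similar to the global context


def merge_patches(x, patch_size, r_min=0.1, r_max=0.5, seed=0):
    """Energy-guided, bin-based merging (hypothetical sketch).

    x: (N, C) tokens, assumed to be in serialized (spatially ordered) order.
    Returns the merged tokens (M, C) and, for every input token, the index
    of the merged token it was folded into.
    """
    rng = np.random.default_rng(seed)
    n = x.shape[0]
    e = energy_scores(x)
    starts = list(range(0, n, patch_size))
    # Patch-level energy: mean token energy within each patch.
    patch_e = np.array([e[s:s + patch_size].mean() for s in starts])
    span = patch_e.max() - patch_e.min()
    w = (patch_e - patch_e.min()) / span if span > 0 else np.zeros_like(patch_e)
    # High-energy patches keep a larger fraction of their tokens.
    keep_ratio = r_min + (r_max - r_min) * w

    merged, assign = [], np.empty(n, dtype=int)
    for p, s in enumerate(starts):
        idx = np.arange(s, min(s + patch_size, n))
        k = max(1, int(round(keep_ratio[p] * len(idx))))
        # Split the patch into k evenly sized bins; pick one random destination per bin.
        dst = np.array([rng.choice(b) for b in np.array_split(idx, k)])
        xn = x[idx] / (np.linalg.norm(x[idx], axis=1, keepdims=True) + 1e-8)
        dn = x[dst] / (np.linalg.norm(x[dst], axis=1, keepdims=True) + 1e-8)
        nearest = (xn @ dn.T).argmax(axis=1)  # most similar destination per token
        nearest[dst - s] = np.arange(k)  # each destination keeps itself
        base = len(merged)
        for j in range(k):
            # Merge by averaging every token assigned to destination j.
            merged.append(x[idx][nearest == j].mean(axis=0))
        assign[idx] = base + nearest
    return np.stack(merged), assign
```

Because `assign` maps every original token to its merged token, `merged[assign]` scatters the merged features back to full resolution; this is one common way (assumed here) to recover per-point outputs for dense tasks such as segmentation.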

Insights

3D point cloud tokens are highly redundant!

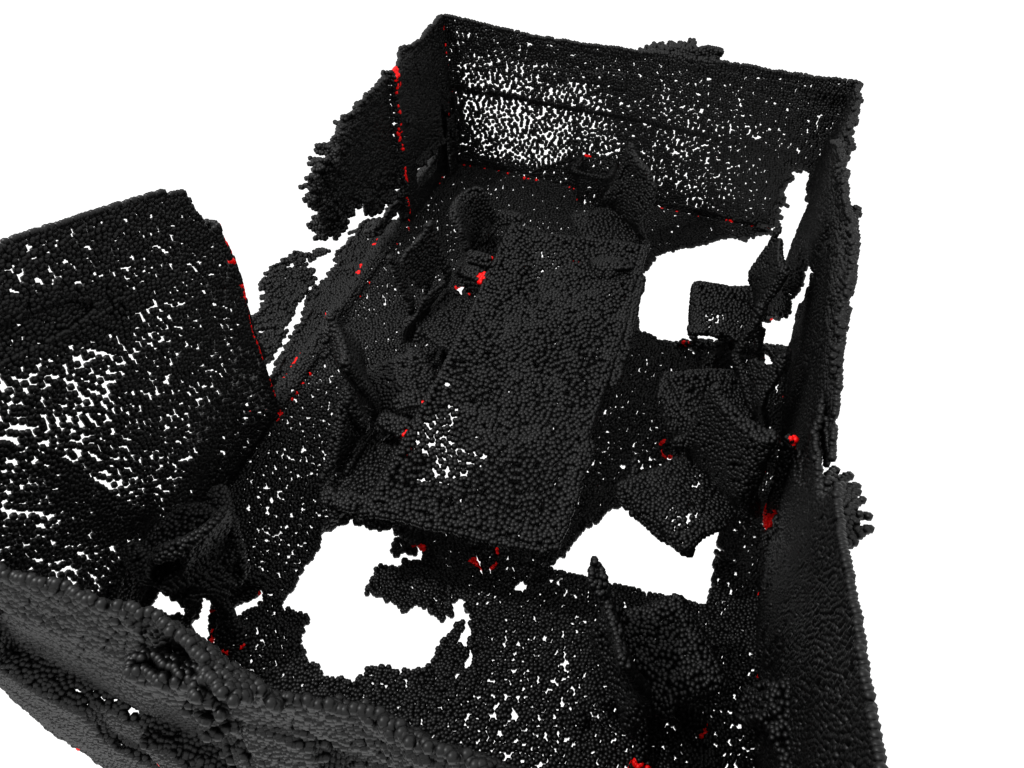

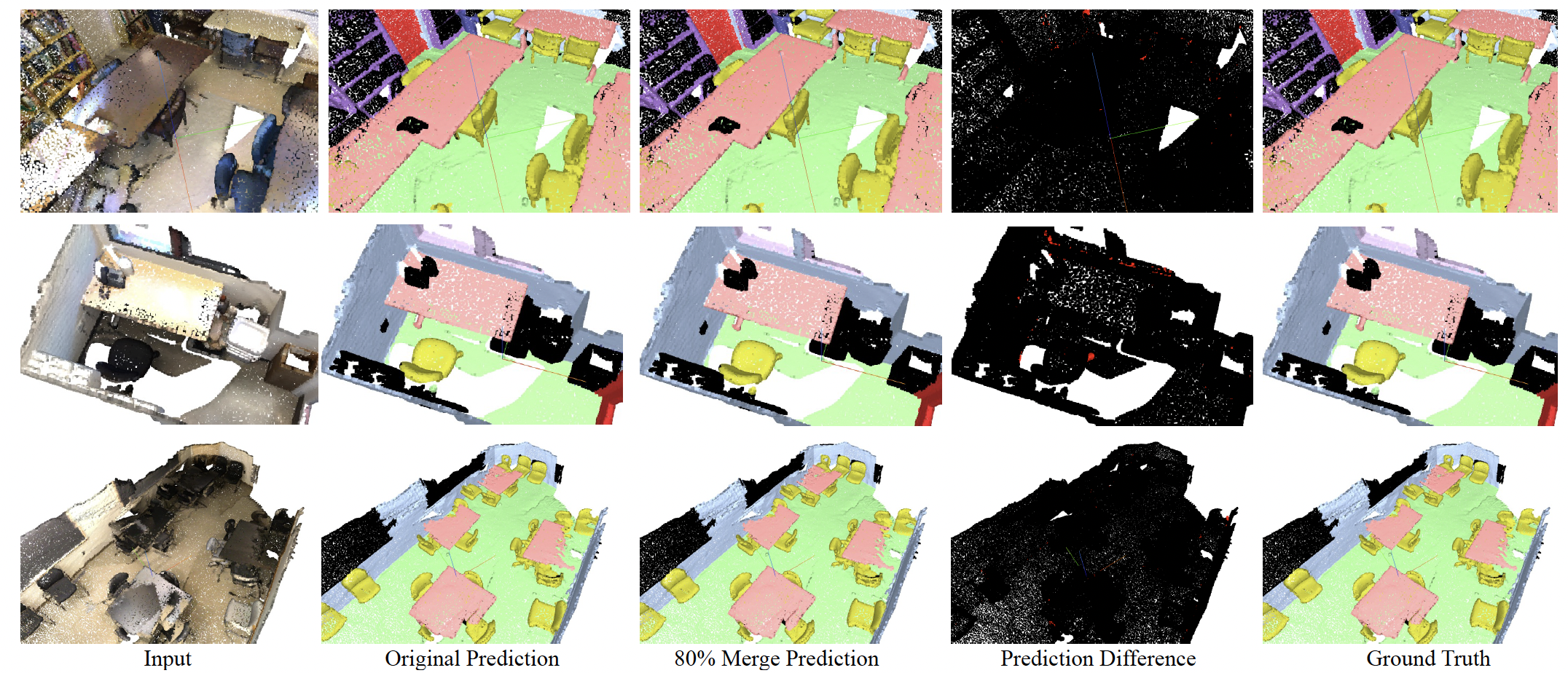

Observation: After merging 90% of the tokens in each attention layer, the attention visualizations and predictions remain nearly identical to those of the baseline (only 5% of the tokens merged). The difference map (rightmost) highlights the few changed points in red, indicating that the tokens processed by the point cloud transformer are highly redundant.

Layer Visualization Videos

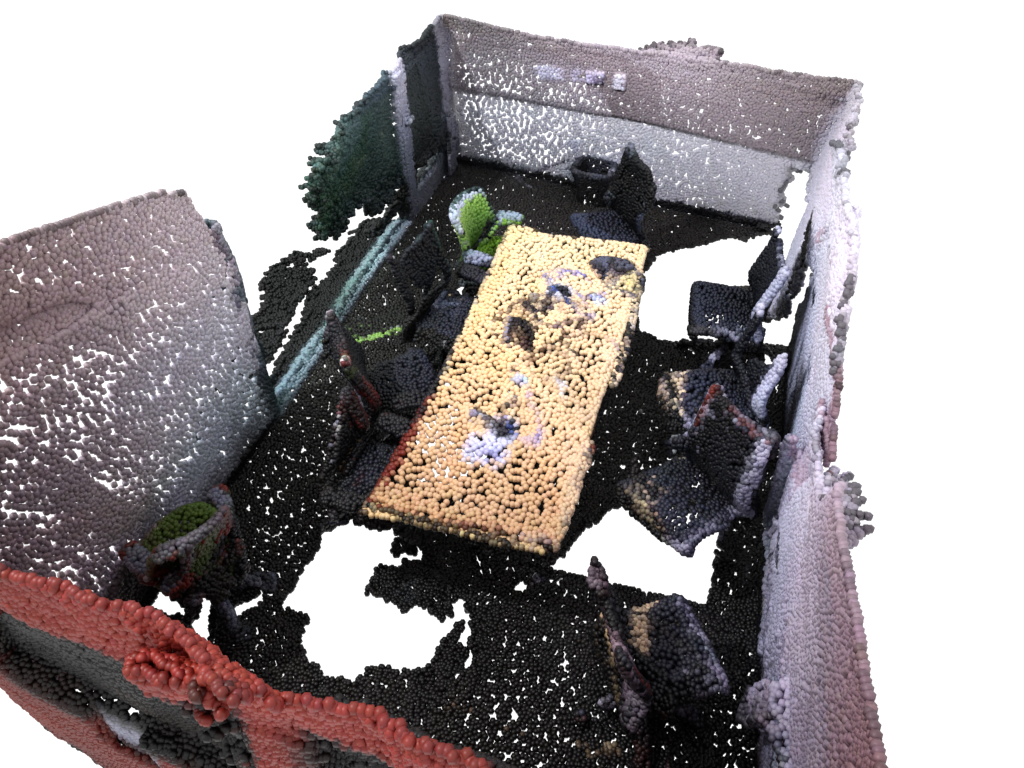

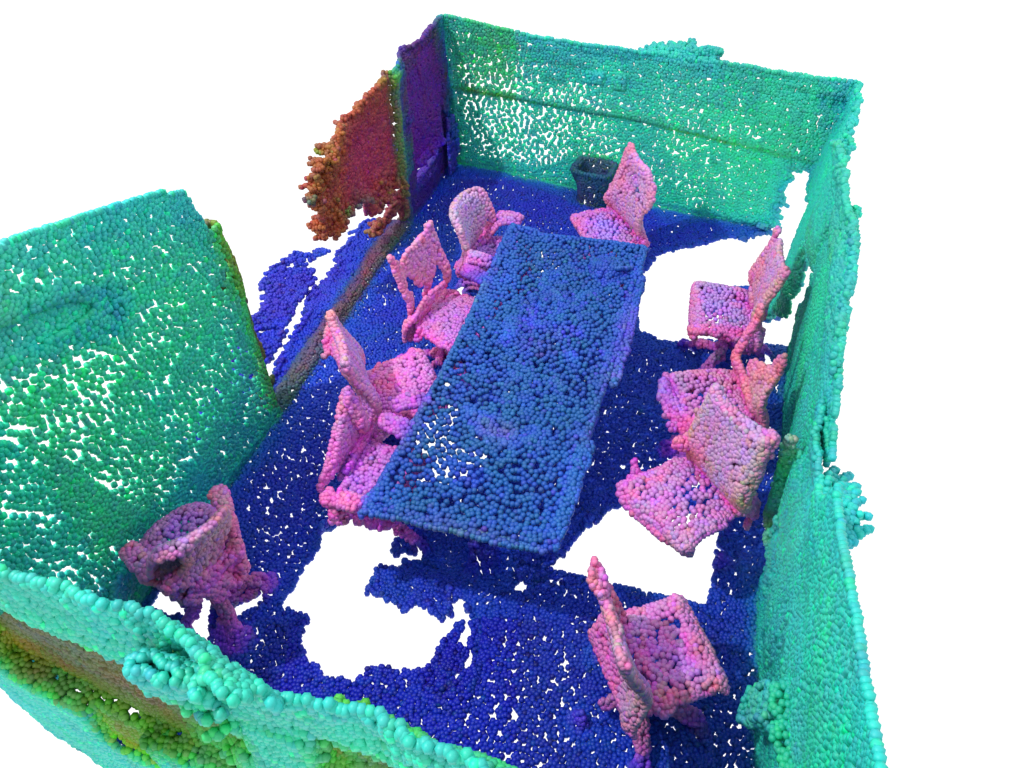

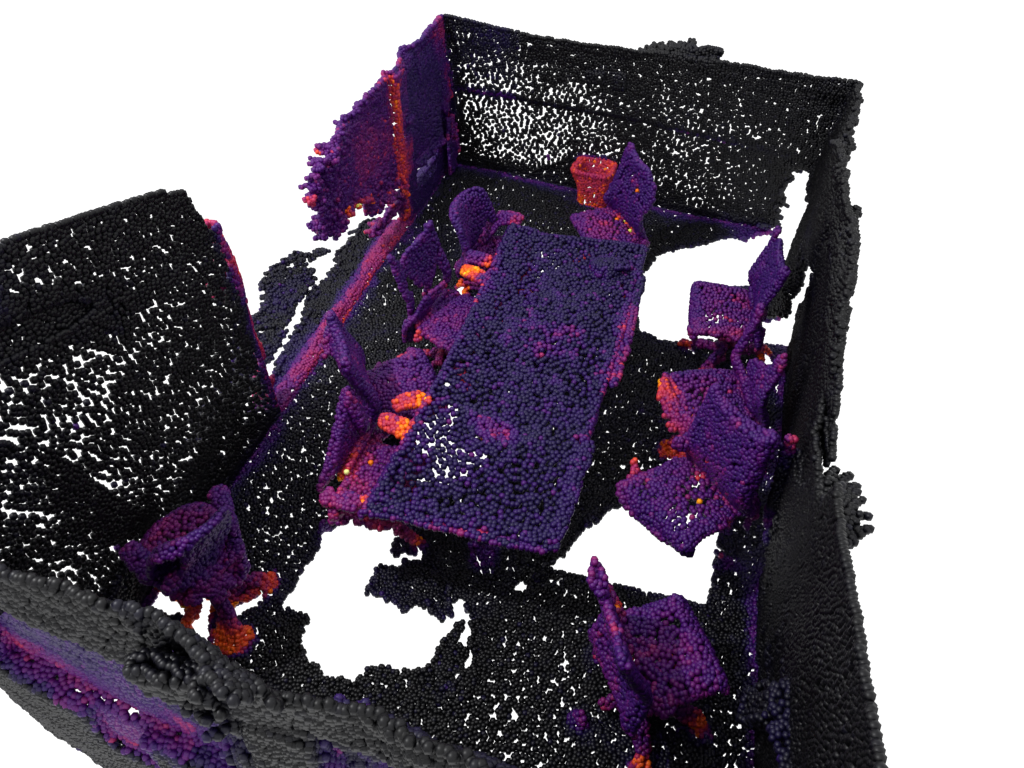

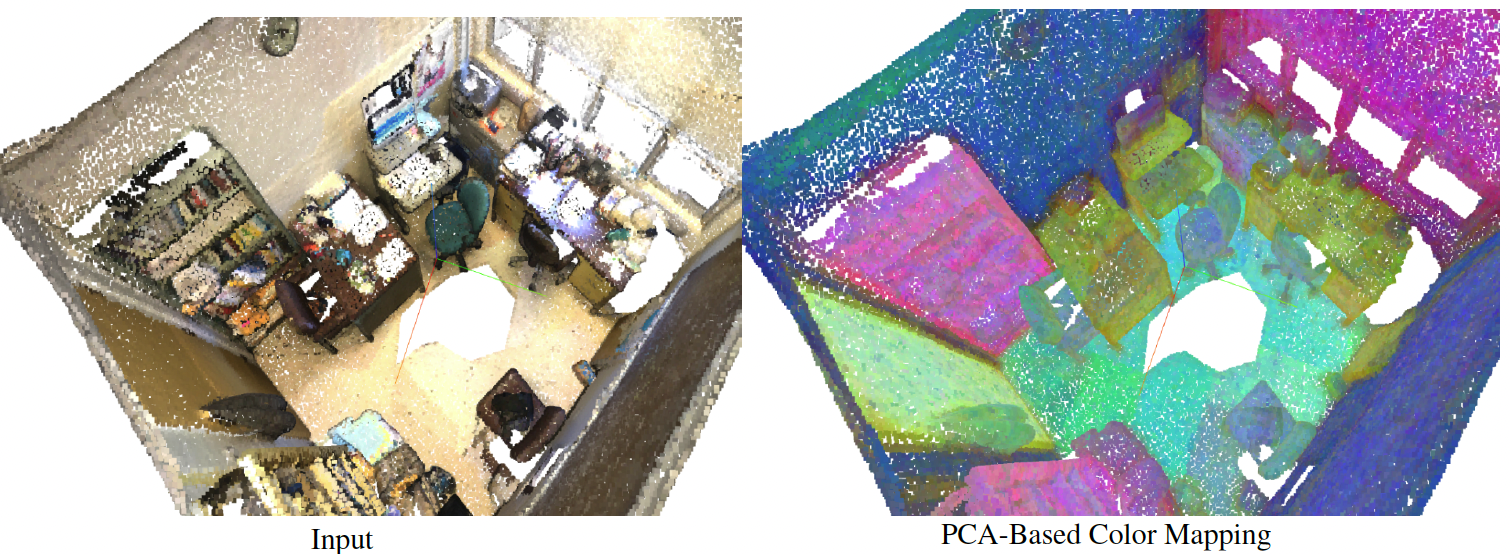

PCA visualization of the features of layers 20 and 21, and of the energy scores, at different merge rates. Even with 90% of the tokens merged, the features of objects in the room remain distinctive, especially in layer 21, where the feature representation remains unchanged despite the aggressive token reduction.

Panels: PCA Feat. (Layer 20), PCA Feat. (Layer 21), Energy (Layer 21)
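PCA feature maps like these are commonly produced by projecting per-point features onto their first three principal components and rescaling each component to [0, 1] so it can serve as an RGB channel. The sketch below assumes NumPy and features that have already been gathered back to the original points (for merged layers, for example by indexing merged features with a token-to-merged-token assignment). The `basis_from` argument is a hypothetical convenience for reusing one PCA basis across merge rates, so that colors stay comparable and are not flipped by the sign ambiguity of the SVD.

```python
import numpy as np


def pca_rgb(features, basis_from=None):
    """Map (N, C) per-point features to (N, 3) colors in [0, 1] via PCA."""
    ref = features if basis_from is None else basis_from
    mean = ref.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(ref - mean, full_matrices=False)
    proj = (features - mean) @ vt[:3].T
    # Rescale each component independently to [0, 1].
    lo, hi = proj.min(axis=0), proj.max(axis=0)
    return (proj - lo) / np.where(hi > lo, hi - lo, 1.0)
```

To compare two merge rates side by side, one would call `pca_rgb(feats_merged, basis_from=feats_reference)` so both panels share the same projection.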

Interactive 3D Point Cloud Visualizer

Explore the token merging effects on a real 3D scene from ScanNet. This interactive visualizer shows the original point cloud and the results after applying our GitMerge3D method.


Results

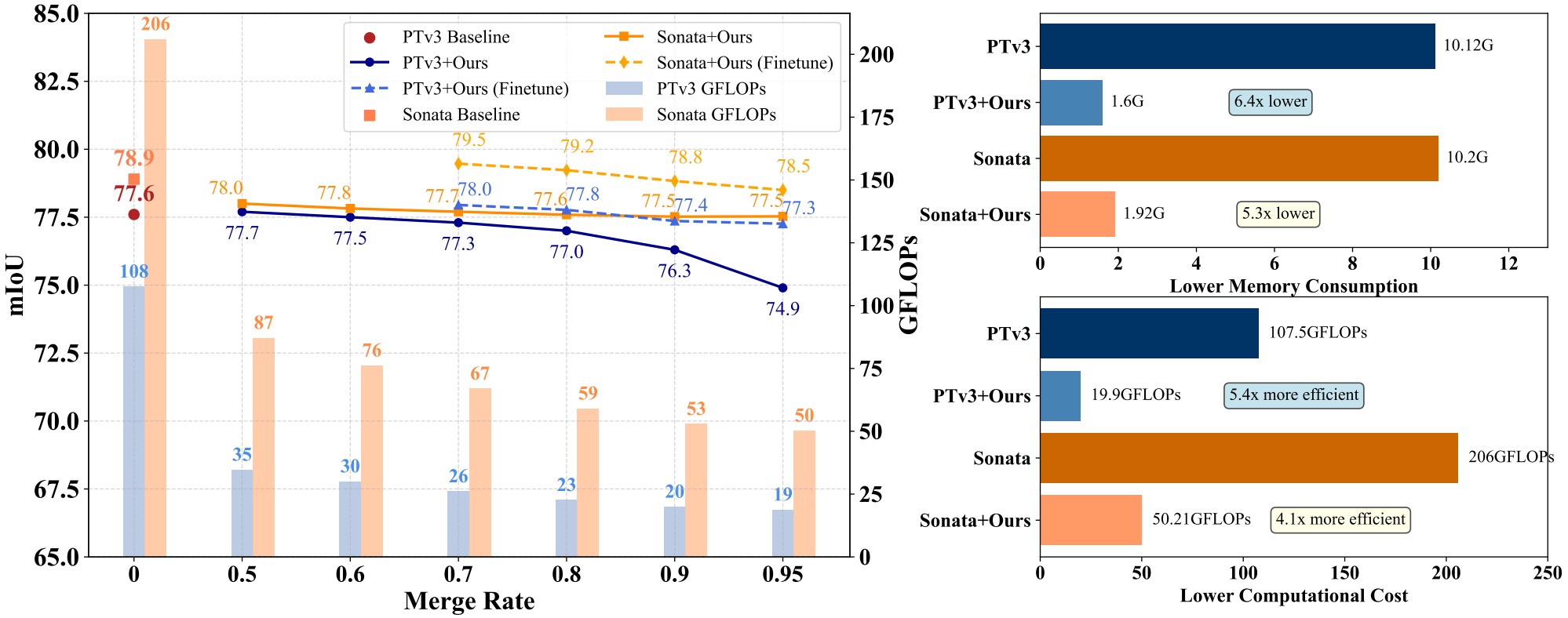

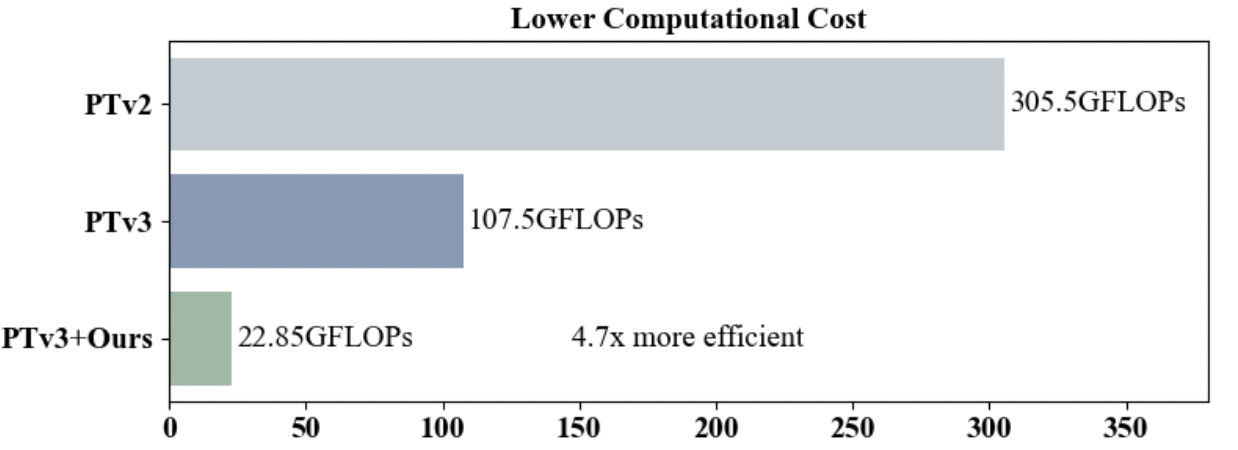

Computational Efficiency

GitMerge3D reduces computational cost by up to 21% with minimal change in accuracy on point cloud tasks.
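Because attention cost grows quadratically with the number of tokens that attend to each other, merging within patches shrinks the attention term much faster than the token count. The back-of-the-envelope estimator below is a hypothetical illustration, not a profile of GitMerge3D. It counts only the QK^T and attention-times-V products of patch-based attention and assumes a fixed patch size (1024 in the example, an assumed value). It ignores projections, MLPs, and merging overhead, so its numbers are not comparable to the end-to-end figure above.

```python
def patch_attention_flops(num_tokens, patch_size, channels, keep_ratio=1.0):
    """Rough FLOPs of QK^T and AV in patch attention after per-patch merging.

    Simplification: a partial last patch is counted as a full patch.
    """
    n_patches = -(-num_tokens // patch_size)  # ceiling division
    k = max(1, round(patch_size * keep_ratio))  # tokens left per patch
    # A (k x channels) @ (channels x k) product costs ~2 * k * k * channels
    # FLOPs (one multiply and one add per term); AV costs the same.
    return n_patches * 2 * (2 * k * k * channels)


full = patch_attention_flops(100_000, 1024, 64)
merged = patch_attention_flops(100_000, 1024, 64, keep_ratio=0.1)
print(f"attention FLOPs kept: {merged / full:.2%}")
```

Keeping 10% of the tokens per patch leaves roughly 1% of these attention FLOPs in this toy model.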

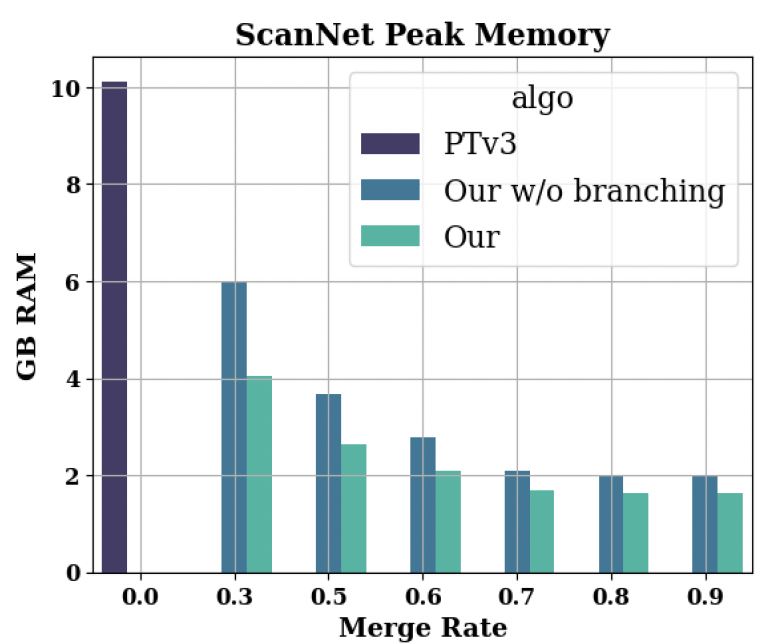

Memory Optimization

Our token merging strategy reduces peak memory usage significantly during inference, enabling processing of larger point clouds on resource-constrained devices.
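One way to see how fewer tokens lower peak memory is to measure it directly. The sketch below runs on the CPU with NumPy and is not the GitMerge3D inference pipeline. It uses Python's `tracemalloc` (which, in recent NumPy versions, also sees array buffers) to record the peak allocation of one dense softmax attention, whose N x N score matrix dominates memory. On a GPU, a framework counter such as PyTorch's `torch.cuda.max_memory_allocated` would play the same role. The token counts are arbitrary.

```python
import tracemalloc

import numpy as np


def peak_attention_bytes(n_tokens, dim=32, seed=0):
    """Peak bytes traced while computing one dense softmax attention over n_tokens."""
    rng = np.random.default_rng(seed)
    q, k, v = (rng.standard_normal((n_tokens, dim), dtype=np.float32) for _ in range(3))
    tracemalloc.start()  # inputs above are allocated before tracing starts
    try:
        scores = (q @ k.T) * dim ** -0.5  # (n, n) score matrix
        scores = np.exp(scores - scores.max(axis=1, keepdims=True))
        out = (scores / scores.sum(axis=1, keepdims=True)) @ v
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak


full = peak_attention_bytes(2048)
merged = peak_attention_bytes(205)  # about 10% of the tokens kept
print(f"peak ratio: {full / merged:.1f}x")
```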

Feature Preservation

GitMerge3D maintains critical geometric features even with aggressive token reduction.

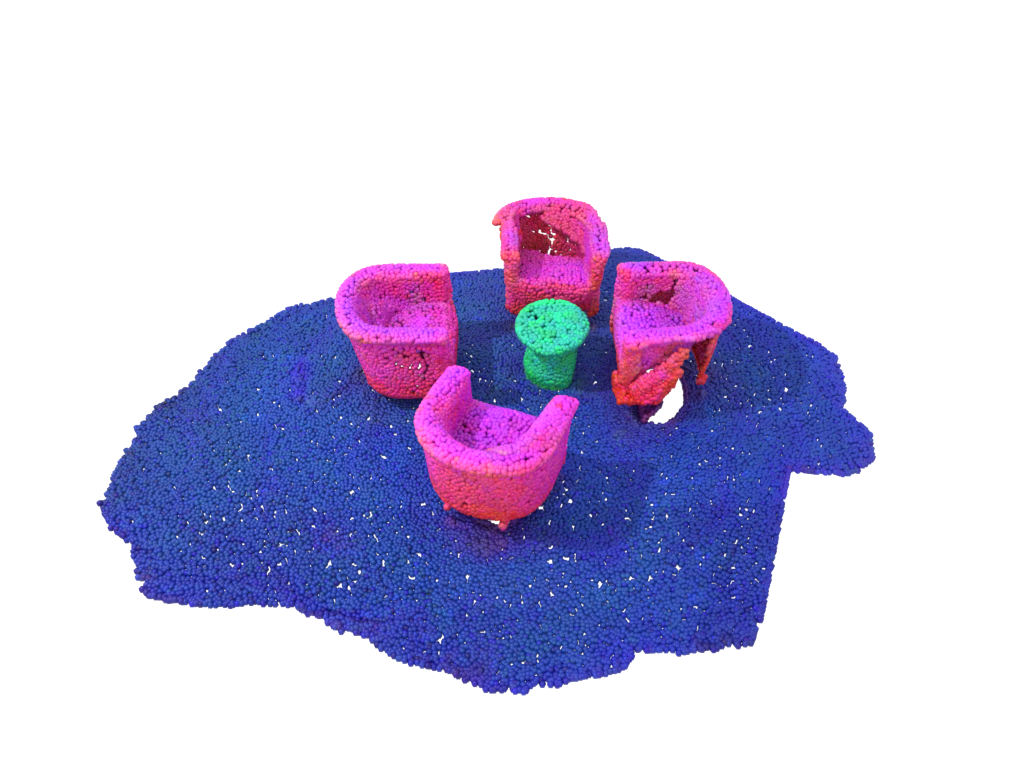

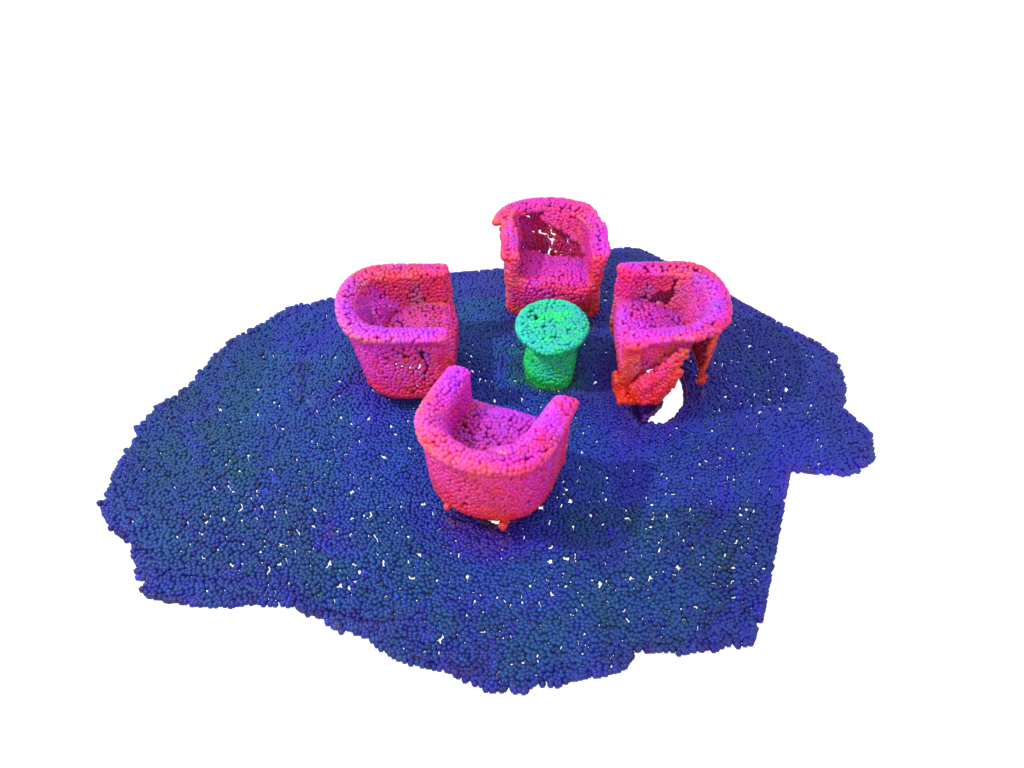

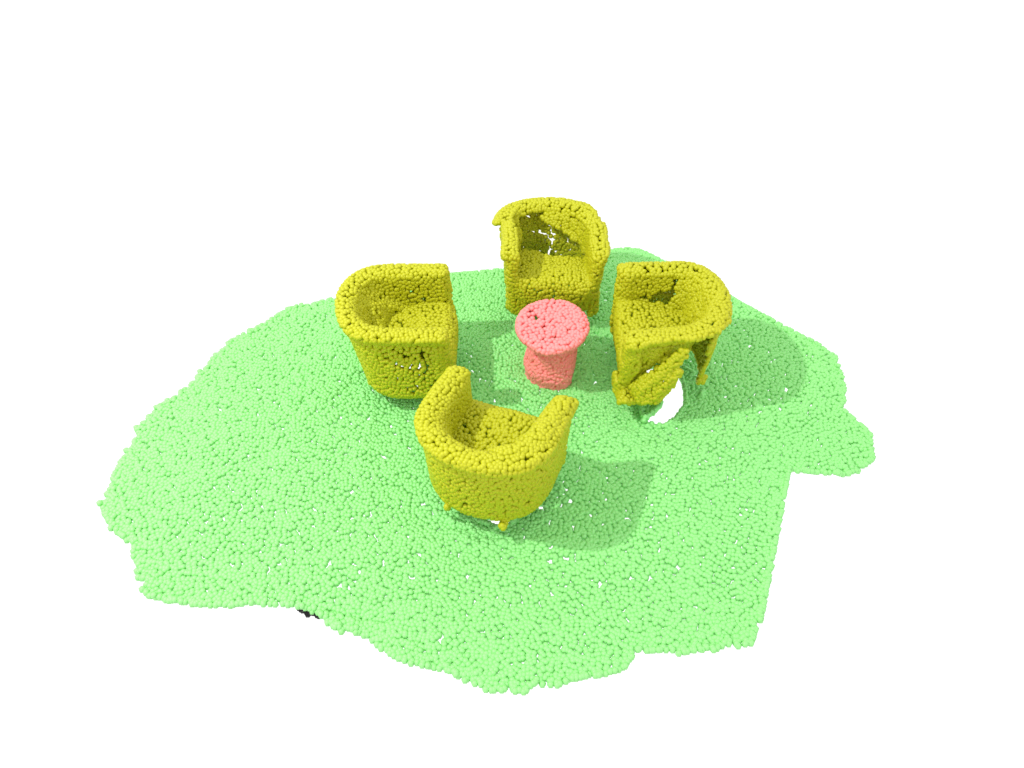

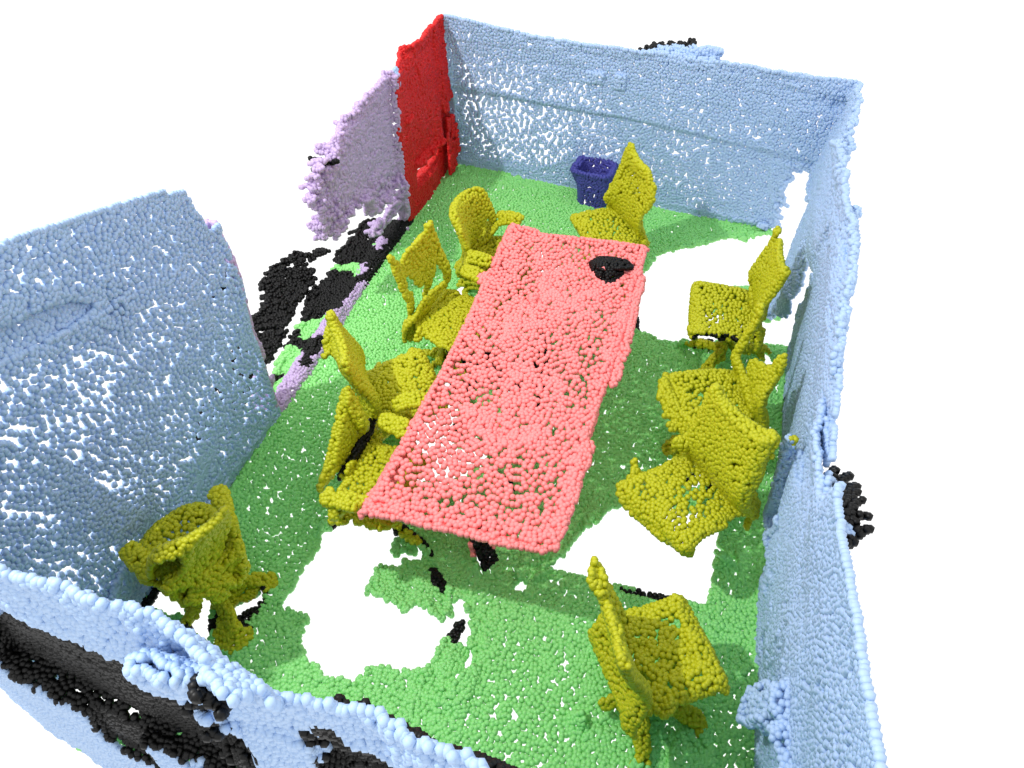

Illustration of ScanNet segmentation results with and without our merging method. As shown in the fourth column, the differences, highlighted in red, are limited to a few points out of hundreds of thousands.
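A difference map of this kind can be computed by comparing the per-point labels from the two runs. The sketch below assumes both predictions are integer label arrays over the same points in the same order. The function names and the gray and red colors are hypothetical choices for illustration.

```python
import numpy as np


def prediction_diff(pred_base, pred_merged):
    """Return a per-point mask of changed labels and the fraction of points that changed."""
    pred_base, pred_merged = np.asarray(pred_base), np.asarray(pred_merged)
    if pred_base.shape != pred_merged.shape:
        raise ValueError("predictions must cover the same points")
    changed = pred_base != pred_merged
    return changed, float(changed.mean())


def diff_colors(changed, same_rgb=(0.8, 0.8, 0.8), diff_rgb=(1.0, 0.0, 0.0)):
    """Color unchanged points gray and changed points red, as (N, 3) RGB in [0, 1]."""
    colors = np.tile(np.asarray(same_rgb, dtype=float), (changed.size, 1))
    colors[changed] = diff_rgb
    return colors


mask, frac = prediction_diff([0, 1, 2, 3], [0, 1, 5, 3])
print(frac)  # 0.25
```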

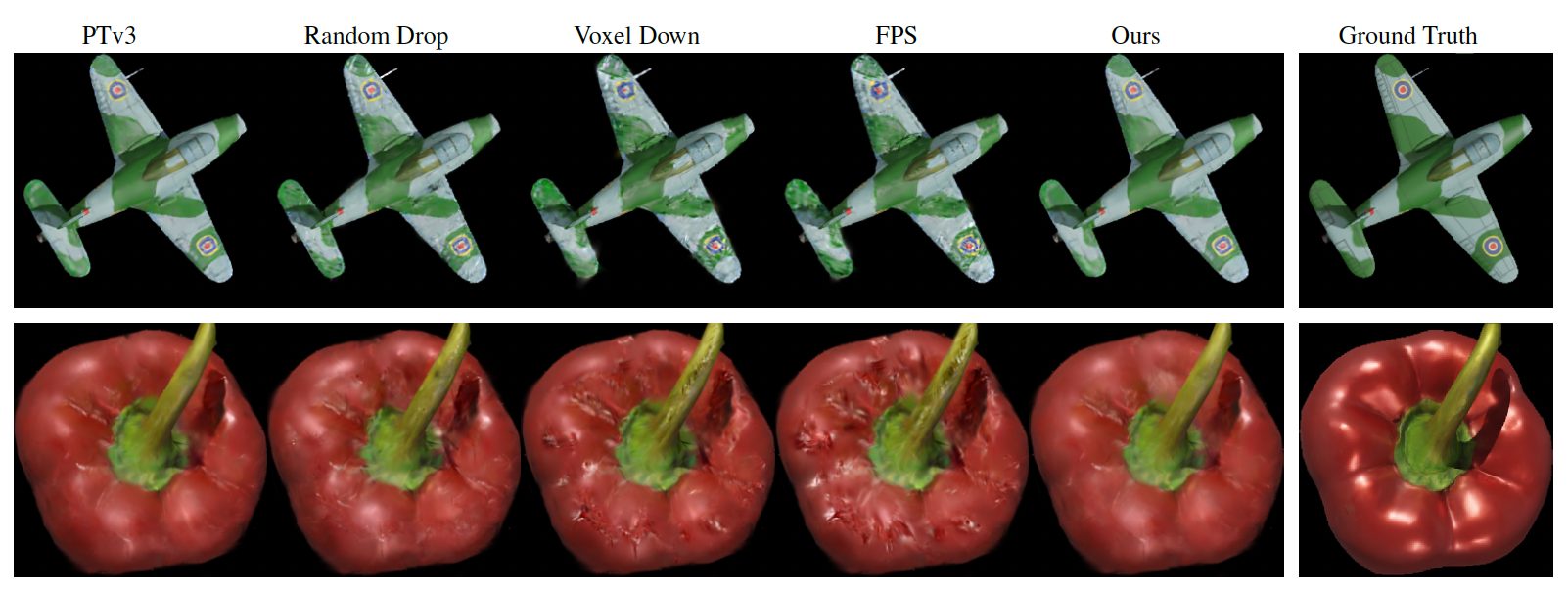

We visualize the outputs of various token compression techniques after removing 80% of the tokens, comparing how much visual quality each one loses (or preserves) on the 3D object reconstruction task.
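To make the pruning-versus-merging distinction concrete, the toy sketch below contrasts two generic ways of discarding 80% of the tokens. In pruning, each removed token is later restored as a copy of its nearest kept token. In merging, each kept token is replaced by the average of the tokens assigned to it. This is a hypothetical illustration with random features and a random keep set, not one of the techniques shown in the figure. For a fixed assignment, the group mean minimizes the squared error within each group, so in this setup the merging variant can never have a higher reconstruction error than the pruning variant.

```python
import numpy as np


def compress_and_restore(x, keep_ratio=0.2, mode="merge", seed=0):
    """Toy token compression: keep a random subset, then restore all N tokens.

    mode="prune": removed tokens are restored as copies of their nearest kept token.
    mode="merge": each kept token becomes the mean of all tokens assigned to it.
    """
    rng = np.random.default_rng(seed)
    n = len(x)
    k = max(1, int(round(keep_ratio * n)))
    keep = rng.choice(n, size=k, replace=False)
    # Assign every token to its nearest kept token (squared Euclidean distance).
    d2 = ((x[:, None, :] - x[None, keep, :]) ** 2).sum(axis=-1)
    assign = d2.argmin(axis=1)
    assign[keep] = np.arange(k)  # kept tokens always represent themselves
    if mode == "prune":
        reps = x[keep]
    elif mode == "merge":
        reps = np.stack([x[assign == j].mean(axis=0) for j in range(k)])
    else:
        raise ValueError(f"unknown mode: {mode}")
    return reps[assign]


x = np.random.default_rng(0).normal(size=(500, 8))
for mode in ("prune", "merge"):
    err = np.mean((compress_and_restore(x, 0.2, mode) - x) ** 2)
    print(mode, round(float(err), 4))
```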


Acknowledgement

This work was supported by Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany's Excellence Strategy - EXC 2075 – 390740016, the DARPA ANSR program under award FA8750-23-2-0004, the DARPA CODORD program under award HR00112590089. The authors thank the International Max Planck Research School for Intelligent Systems (IMPRS-IS) for supporting Duy M. H. Nguyen. Tuan Anh Tran, Duy M. H. Nguyen, Michael Barz and Daniel Sonntag are also supported by the No-IDLE project (BMBF, 01IW23002), the MASTER project (EU, 101093079), and the Endowed Chair of Applied Artificial Intelligence, Oldenburg University.

Citation

@inproceedings{gitmerge3d2025,
  title={How Many Tokens Do 3D Point Cloud Transformer Architectures Really Need?},
  author={Tuan Anh Tran and Duy Minh Ho Nguyen and Hoai-Chau Tran and Michael Barz and Khoa D. Doan and Roger Wattenhofer and Vien Anh Ngo and Mathias Niepert and Daniel Sonntag and Paul Swoboda},
  booktitle={Advances in Neural Information Processing Systems},
  year={2025}
}